0+

Monthly buyers. 54% mid-market and enterprise.

0 Minutes

Buyers spend comparing products

0% of Buyers

Plan to make a purchase decision within 3 months

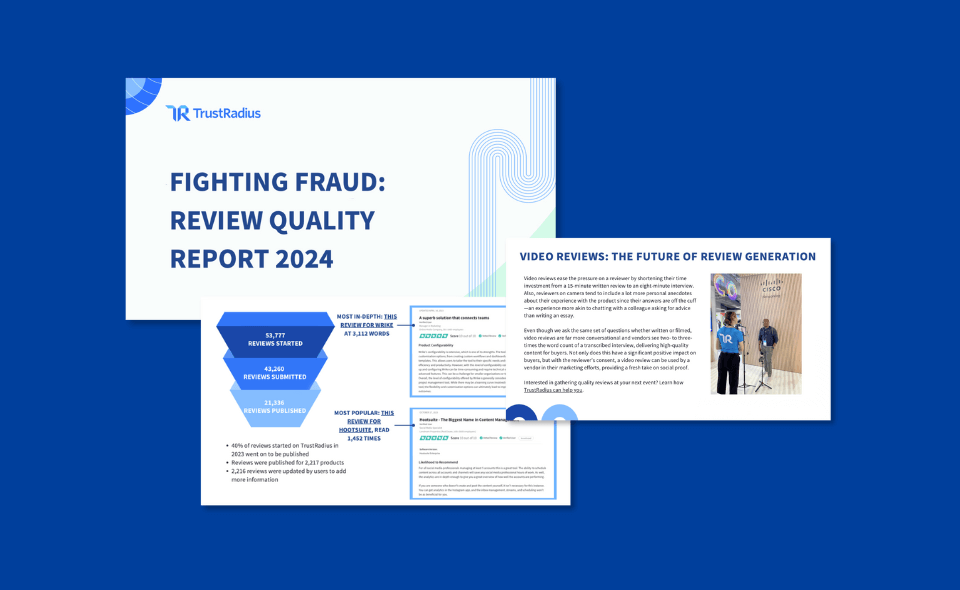

Quality over quantity

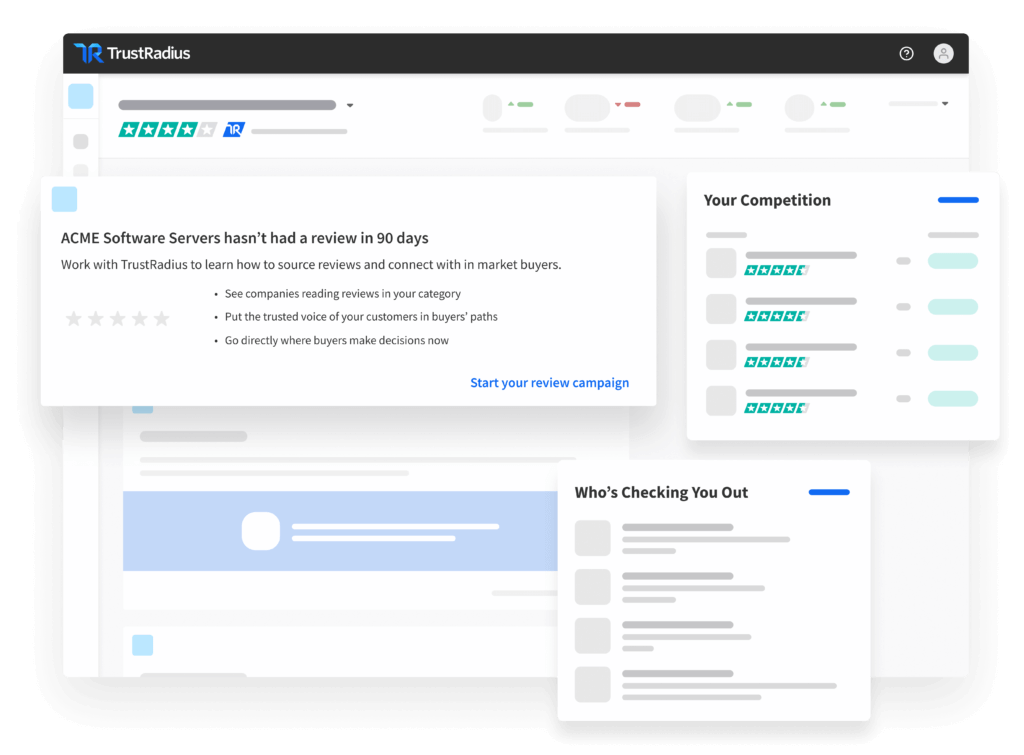

Traditional review sites fail to meet the needs of today's vendors. Superficial reviews, pay-to-play pricing schemes, and other issues make it hard to differentiate your solution and engage the right buyers at the right time.

With TrustRadius, you generate more in-depth reviews (400+ words) that attract in-market buyers.

Drive brand preference

Improve conversions

Generate more downstream intent data for ABM
